Participatory AI Approaches in AI Development and Governance

Vidhi Centre for Legal Policy in collaboration with the Centre for Responsible AI at IIT Madras

This two-part research study prepared by the Vidhi Centre for Legal Policy (Vidhi) in collaboration with the Centre for Responsible AI (CeRAI) at IIT Madras outlines a participatory approach to artificial intelligence (AI) development and governance. A participatory approach emphasises the inclusion of relevant stakeholders in shaping how AI systems are designed, deployed and governed. By integrating all relevant parties into the decision-making process, this approach empowers affected users to have a say in the existence and operation of AI-based systems, leading to more equitable and responsible outcomes.

Why is a participatory approach to AI development important?

AI technologies shape behaviour and make decisions that affect people in their day-to-day lives. While they can offer significant benefits, such as improved accuracy and efficiency, they can also cause harm, both to individuals and to society at large.

A relatively cost-efficient way of mitigating these harms is to consult affected stakeholders before a given AI system is deployed. Here, the benefit of a participatory approach lies in making the process fairer: stakeholders’ interests and considerations are accounted for before any AI-based system that will affect them is rolled out. Stakeholder input can also improve the accuracy and efficiency of such systems.

For example, the healthcare sector is increasingly exploring AI solutions such as large language models (LLMs), which are complex deep learning models trained on extensive text data. LLMs use self-supervised learning to identify statistical associations in that data and generate text tokens in response to the inputs they receive. In healthcare, they are used to generate patient summaries, aid diagnosis and operate chatbots. Implementing LLMs effectively requires a participatory approach that involves key stakeholders such as doctors, patients and legal teams. This approach can help address sector-specific challenges, including securing access to reliable training data, addressing legal and ethical concerns, and ensuring transparency in decision-making. This, in turn, can improve acceptance of the technology and reduce bias in healthcare applications.

Operationalising the Participatory AI (PAI) Approach

Beyond establishing the need for a participatory approach, the papers argue that such an approach must go beyond mere discussion with stakeholders. They provide a framework for operationalising it by addressing three questions: Who must be consulted? How should the collective feedback of identified stakeholders be integrated? How can complex machine learning concepts be explained to stakeholders?

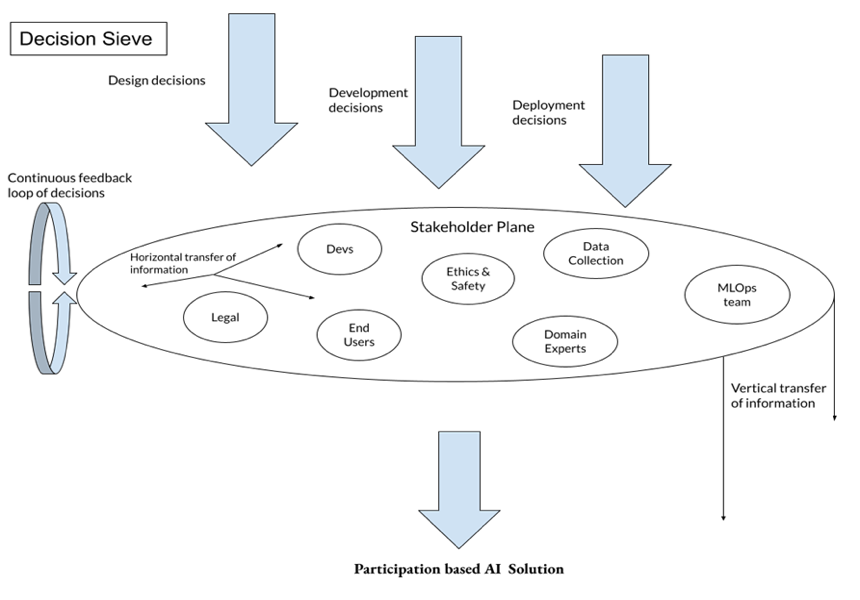

To implement a participatory AI approach, the papers propose a ‘decision sieve’ (Figure 1), which broadly involves the following steps:

- Firstly, AI software developers should have a clear plan for developing the program. This involves mapping out stages such as data collection, data sorting and annotation, model training and subsequent testing.

- Secondly, developers should identify which steps in their plan require participatory input, and determine the relevant stakeholders for each such step along with the information those stakeholders can provide.

- Thirdly, once input is gathered, it should be consolidated through deliberation or voting, depending on the nature of the decision and any constraints the developer faces.
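The three steps above can be sketched in code. The Python below is a minimal, hypothetical illustration, not the papers' own method: the names `PipelineStep`, `consolidate` and `run_sieve`, the `"vote"`/`"deliberation"` labels, the simple majority rule and the sample responses are all assumptions made for illustration. It maps out a plan (step 1), flags which stages need participatory input and from whom (step 2), and consolidates the gathered input either by majority vote or by collecting it for deliberation (step 3).

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class PipelineStep:
    """One stage in the developer's plan (step 1: map out the pipeline)."""
    name: str
    needs_input: bool = False  # step 2: does this stage call for participatory input?
    stakeholders: list[str] = field(default_factory=list)  # step 2: who to consult
    mode: str = "deliberation"  # step 3: "vote" or "deliberation" (hypothetical labels)


def consolidate(step: PipelineStep, responses: dict[str, str]) -> str | list[str]:
    """Step 3: consolidate the input gathered for one step.

    Only responses from stakeholders mapped to this step are kept. In "vote"
    mode the most common answer wins (ties go to the answer seen first); in
    "deliberation" mode every relevant answer is returned for discussion.
    """
    if step.mode not in ("vote", "deliberation"):
        raise ValueError(f"unknown consolidation mode: {step.mode!r}")
    relevant = [answer for who, answer in responses.items() if who in step.stakeholders]
    if step.mode == "vote":
        if not relevant:
            raise ValueError(f"no stakeholder votes received for {step.name!r}")
        return Counter(relevant).most_common(1)[0][0]
    return relevant


def run_sieve(plan: list[PipelineStep],
              responses_by_step: dict[str, dict[str, str]]) -> dict[str, str | list[str]]:
    """Pass the plan through the sieve, consolidating input only where it is needed."""
    decisions: dict[str, str | list[str]] = {}
    for step in plan:
        if step.needs_input:
            decisions[step.name] = consolidate(step, responses_by_step.get(step.name, {}))
    return decisions


# Hypothetical plan for a healthcare LLM; stakeholders follow the example in the text.
plan = [
    PipelineStep("data collection", needs_input=True,
                 stakeholders=["doctors", "patients"]),
    PipelineStep("data annotation", needs_input=True,
                 stakeholders=["doctors", "legal team"], mode="vote"),
    PipelineStep("model training"),  # treated as purely technical in this sketch
    PipelineStep("testing", needs_input=True,
                 stakeholders=["doctors", "patients", "legal team"], mode="vote"),
]

# Made-up responses; "vendor" is not mapped to any step, so its input is ignored.
responses = {
    "data collection": {"doctors": "include rural clinics",
                        "patients": "require explicit consent"},
    "data annotation": {"doctors": "scheme A", "legal team": "scheme A",
                        "vendor": "scheme B"},
    "testing": {"doctors": "local-language tests", "patients": "local-language tests",
                "legal team": "bias audit"},
}

print(run_sieve(plan, responses))
```

Majority voting is used here only because it is the simplest consolidation rule to show; depending on the nature of the decision and the developer's constraints, votes might be weighted, or voting replaced entirely by structured deliberation.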

Input obtained in this way gives developers information they did not previously have. This can improve the efficacy of the AI system by making the model more granular and context-aware. Because affected stakeholders have a say, the process also becomes fairer, which increases the product’s legitimacy and acceptability.

Fig 1. A model decision sieve which can channelise participatory input received from multiple stakeholders.

The second paper details these steps in two contexts: LLMs in healthcare and facial recognition technology in policing.